Projects

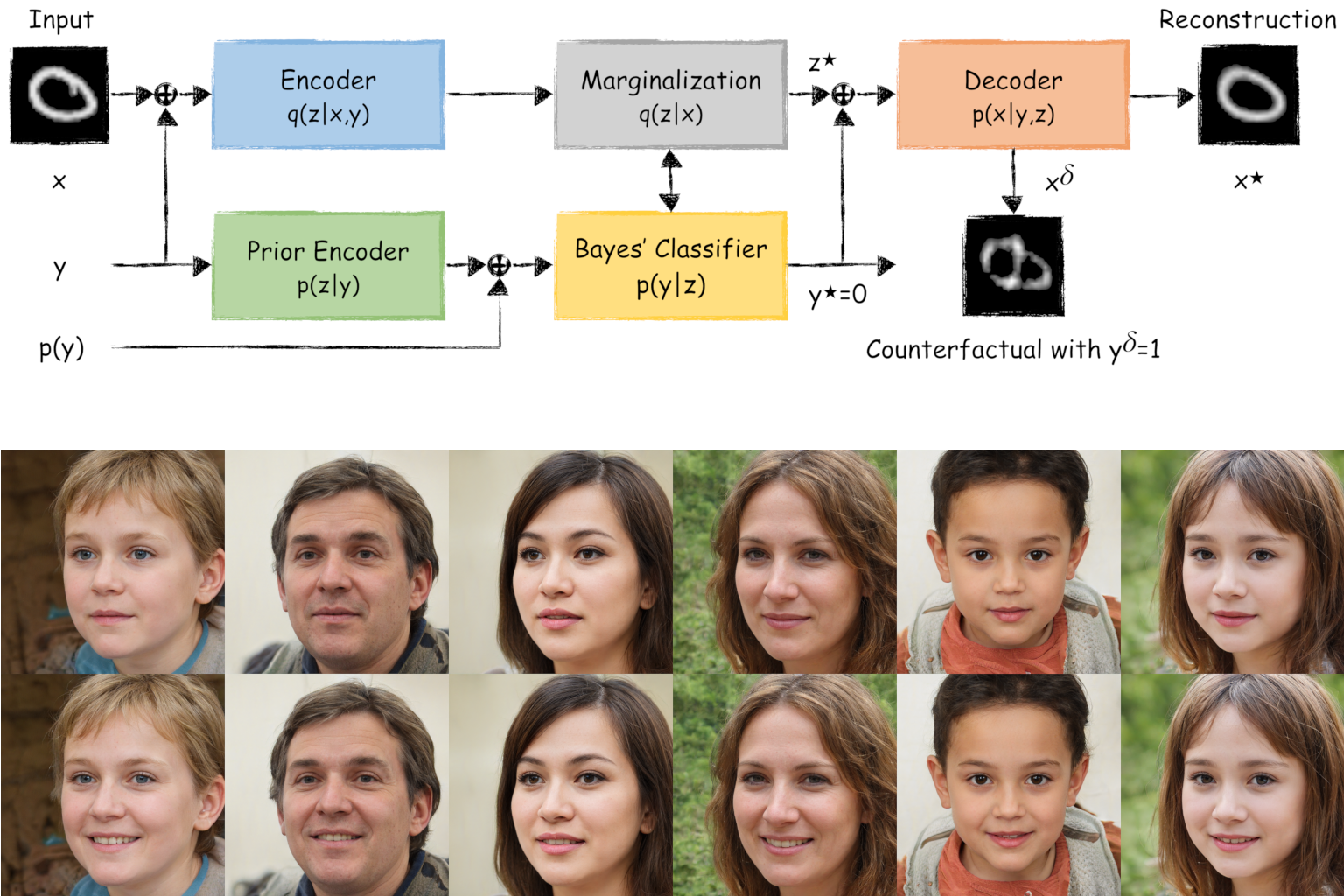

The Gaussian Discriminant Variational Autoencoder (GdVAE) - A Self-Explainable Model with Counterfactual Explanations

2024-10-01

We introduce the GdVAE, a self-explainable model that employs transparent prototypes in a white-box classifier. Alongside class predictions, we provide counterfactual explanations.

Self-explainable Model, Counterfactual Explanation, Manifold Traversal, Generative Models, Variational Autoencoder

Project Site
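Why a Gaussian discriminant counts as a transparent, white-box classifier can be shown with a small sketch. It assumes two classes with prototypes (class means) `mu0` and `mu1` in the latent space, equal class priors and a shared identity covariance. Under these assumptions the log-odds are linear in the latent code, so a counterfactual with a chosen target log-odds can be computed in closed form by moving along the normal of the decision boundary. All function names are hypothetical. The decoder that would map the shifted latent code back to an image is omitted. This is an illustration under stated assumptions, not the GdVAE implementation.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def gd_logit(z, mu0, mu1):
    """Log-odds log p(y=1|z) - log p(y=0|z) for two unit-variance isotropic
    Gaussians with equal priors (assumption); the result is linear in z."""
    w = [m1 - m0 for m0, m1 in zip(mu0, mu1)]
    b = -0.5 * (dot(mu1, mu1) - dot(mu0, mu0))
    return dot(w, z) + b


def prob_class1(z, mu0, mu1):
    # posterior probability of class 1 is the sigmoid of the log-odds
    return 1.0 / (1.0 + math.exp(-gd_logit(z, mu0, mu1)))


def counterfactual(z, mu0, mu1, target_logit):
    """Shift z along w = mu1 - mu0 (the boundary normal) until the log-odds
    equal target_logit; for a linear boundary this is the smallest
    Euclidean change that reaches the target."""
    w = [m1 - m0 for m0, m1 in zip(mu0, mu1)]
    step = (target_logit - gd_logit(z, mu0, mu1)) / dot(w, w)
    return [zi + step * wi for zi, wi in zip(z, w)]


mu0, mu1 = [-1.0, 0.0], [1.0, 0.0]   # hypothetical 2-D class prototypes
z = [-0.5, 0.3]                      # hypothetical latent code of an input
z_cf = counterfactual(z, mu0, mu1, target_logit=2.0)
print(prob_class1(z, mu0, mu1), z_cf, prob_class1(z_cf, mu0, mu1))
```

Only the coordinate along the prototype difference changes, which is what makes the explanation easy to read: the counterfactual differs from the input only in the direction the classifier actually uses.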

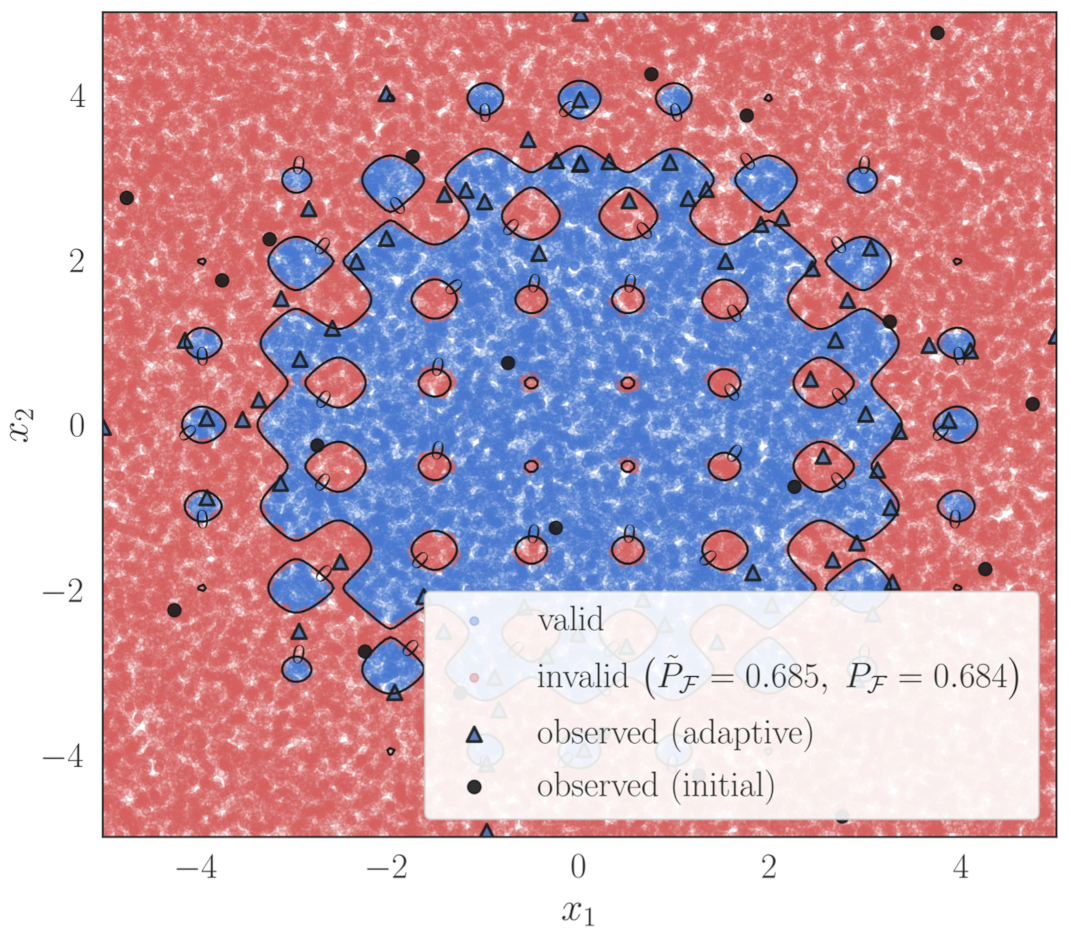

Quantifying Local Model Validity using Active Learning

2024-07-15

Machine learning models in real-world applications must often meet regulatory standards that require low approximation errors. Global error metrics are often too insensitive to reveal local deviations, while checking validity locally is costly. This method learns a model of the approximation error and uses active learning to estimate local validity efficiently while requiring less data. It is more sensitive to local changes in validity than global metrics and models the error effectively with minimal data.

Neural Networks, Uncertainty Quantification, Gaussian Processes, Limit State, Model Validity, Active Learning

Project Site
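The general idea of learning the model error with a Gaussian process and querying it actively can be sketched as follows. The sketch makes several assumptions. The model error is a hypothetical 1-D function (`model_error`) that exceeds a tolerance `tol` only around x = 0.7. The GP uses a squared-exponential kernel with hand-picked hyperparameters. The acquisition rule is the U-function from limit-state reliability analysis (AK-MCS), swapped in for illustration and not necessarily the criterion used in this project. Local validity is reported as the GP probability that the error stays below `tol`.

```python
import math

import numpy as np


def rbf(a, b, length=0.1, sig2=0.04):
    # squared-exponential kernel on 1-D inputs; sig2 is the prior error variance
    d = a[:, None] - b[None, :]
    return sig2 * np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_tr, y_tr, x_q, noise=1e-6, sig2=0.04):
    # exact zero-mean GP regression: posterior mean and std at the query points
    K = rbf(x_tr, x_tr, sig2=sig2) + noise * np.eye(len(x_tr))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_tr))
    Ks = rbf(x_tr, x_q, sig2=sig2)
    mean = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = sig2 - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))


def model_error(x):
    # hypothetical: the model is accurate except in a region around x = 0.7
    return 0.05 + 0.4 * np.exp(-((x - 0.7) / 0.08) ** 2)


def active_learning(tol=0.2, n_init=4, n_iter=12):
    grid = np.linspace(0.0, 1.0, 201)  # candidate inputs
    chosen = [int(i) for i in np.linspace(0, 200, n_init).astype(int)]
    for _ in range(n_iter):
        x_tr = grid[chosen]
        mean, std = gp_posterior(x_tr, model_error(x_tr), grid)
        # U-function: small where the GP is unsure which side of tol we are on
        u = np.abs(mean - tol) / std
        u[chosen] = np.inf  # never query the same point twice
        chosen.append(int(np.argmin(u)))
    x_tr = grid[chosen]
    mean, std = gp_posterior(x_tr, model_error(x_tr), grid)
    # local validity: P(error <= tol) under the Gaussian posterior
    p_valid = np.array([0.5 * (1.0 + math.erf((tol - m) / (s * math.sqrt(2.0))))
                        for m, s in zip(mean, std)])
    return grid, p_valid, chosen


grid, p_valid, chosen = active_learning()
print(sorted(round(float(grid[i]), 3) for i in chosen))
print(p_valid[40], p_valid[140])  # validity at x = 0.2 and x = 0.7
```

Because the U-function favours points whose error is close to the tolerance, most queries cluster around the edges of the invalid region, which is where the validity estimate is most sensitive.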

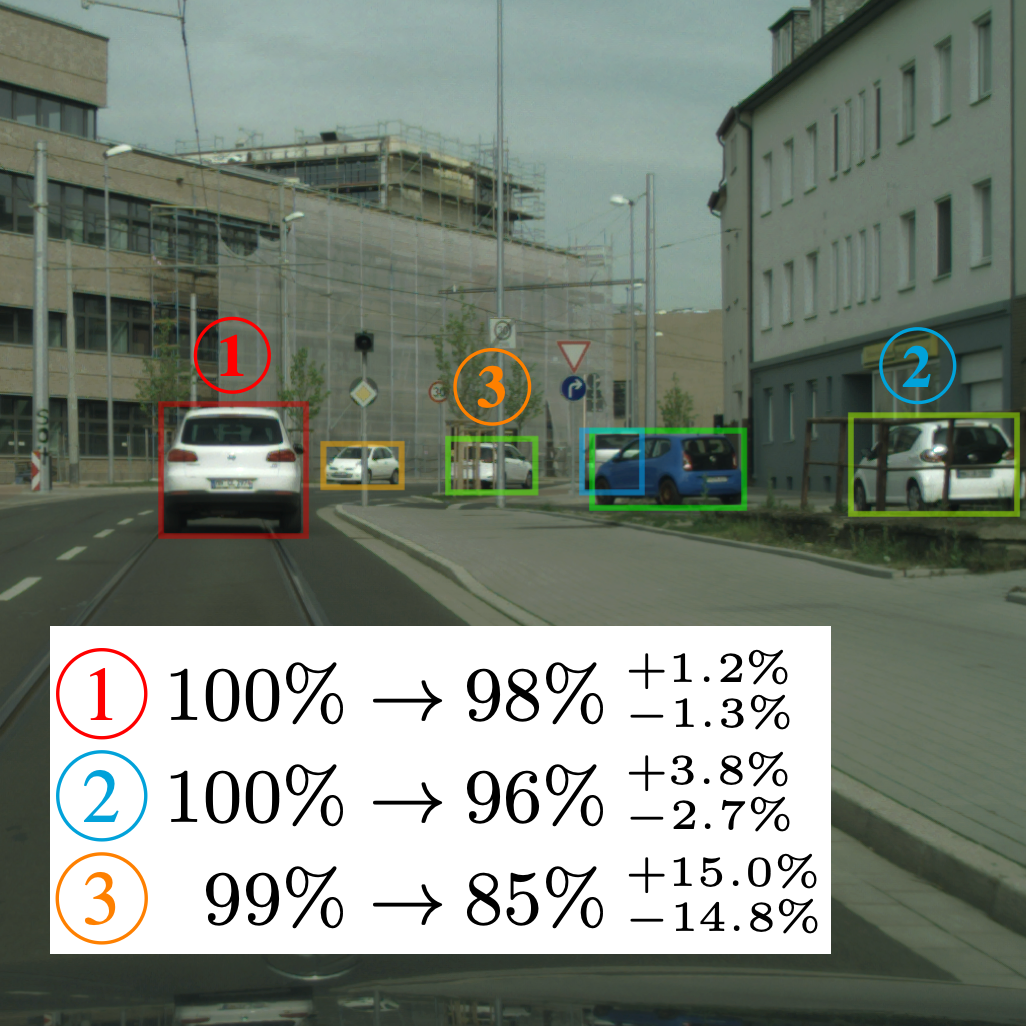

Parametric and Multivariate Uncertainty Calibration for Regression and Object Detection 2022

We inspect the calibration properties of common detection networks and extend state-of-the-art recalibration methods. Our methods use a Gaussian process (GP) recalibration scheme that outputs parametric distributions (e.g., Gaussian or Cauchy). GP recalibration enables local (conditional) uncertainty calibration by capturing dependencies between neighboring samples.

Neural Networks, Uncertainty Calibration, Regression Calibration

Project Site
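As a much simpler, global stand-in for the GP recalibration described above, the sketch below fits one scale factor for the predicted standard deviations of a Gaussian regression output. It minimises the negative log-likelihood (NLL) on held-out data, which has the closed-form solution s² = mean(((y − μ)/σ)²). Unlike the GP scheme, a single global factor cannot capture local, input-dependent miscalibration. The function names and data are hypothetical.

```python
import math


def fit_variance_scale(mu, sigma, y):
    # closed-form minimiser of the Gaussian NLL over one global scale s:
    # d/ds [sum log(s*sigma_i) + z_i^2 / (2 s^2)] = 0  =>  s^2 = mean(z_i^2)
    z2 = [((yi - mi) / si) ** 2 for mi, si, yi in zip(mu, sigma, y)]
    return math.sqrt(sum(z2) / len(z2))


def gaussian_nll(mu, sigma, y):
    # average negative log-likelihood of y under N(mu, sigma^2)
    return sum(0.5 * math.log(2.0 * math.pi * s * s) + 0.5 * ((yi - m) / s) ** 2
               for m, s, yi in zip(mu, sigma, y)) / len(y)


# hypothetical held-out predictions that are overconfident by a factor of 2
mu = [0.0, 0.0, 0.0, 0.0]
sigma = [1.0, 1.0, 1.0, 1.0]
y = [2.0, -2.0, 2.0, -2.0]

s = fit_variance_scale(mu, sigma, y)
sigma_cal = [s * si for si in sigma]
print(s, gaussian_nll(mu, sigma, y), gaussian_nll(mu, sigma_cal, y))
```

The same NLL objective also applies to other parametric families such as the Cauchy distribution, but the closed form above holds only for the Gaussian case.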

Bayesian Confidence Calibration for Epistemic Uncertainty Modelling

2021

We introduce Bayesian confidence calibration, a framework for obtaining calibrated confidence estimates together with an estimate of the uncertainty of the calibration method itself. We use stochastic variational inference to build a calibration mapping that outputs a probability distribution rather than a single calibrated estimate. With this approach, we achieve state-of-the-art calibration performance for object detection.

Neural Networks, Uncertainty Calibration

Project Site
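What "a distribution rather than a single calibrated estimate" means can be illustrated with a small sketch. It assumes a logistic (Platt-style) calibration mapping whose two parameters `(a, b)` already have a fitted, mean-field Gaussian posterior, for example one obtained by stochastic variational inference. The posterior values and the function name are hypothetical, and fitting the posterior is not shown. Sampling the parameters and passing a raw confidence through the mapping yields a mean calibrated confidence plus a spread that reflects the uncertainty of the calibration itself.

```python
import math
import random


def calibrated_confidence_distribution(conf, post_mean, post_std,
                                       n_samples=2000, seed=0):
    """Monte Carlo mean and std of the calibrated confidence for one raw
    confidence. post_mean/post_std describe an assumed independent Gaussian
    posterior over the logistic calibration parameters (a, b)."""
    rng = random.Random(seed)
    logit = math.log(conf / (1.0 - conf))
    samples = []
    for _ in range(n_samples):
        a = rng.gauss(post_mean[0], post_std[0])
        b = rng.gauss(post_mean[1], post_std[1])
        samples.append(1.0 / (1.0 + math.exp(-(a * logit + b))))
    mean = sum(samples) / n_samples
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / (n_samples - 1))
    return mean, std


# hypothetical posteriors: a confident one and a wide one (e.g. little data)
print(calibrated_confidence_distribution(0.9, (0.8, -0.2), (0.05, 0.1)))
print(calibrated_confidence_distribution(0.9, (0.8, -0.2), (0.2, 0.4)))
```

A wider posterior over the calibration parameters produces a wider spread of calibrated confidences for the same detection, which is the epistemic uncertainty the framework makes explicit.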

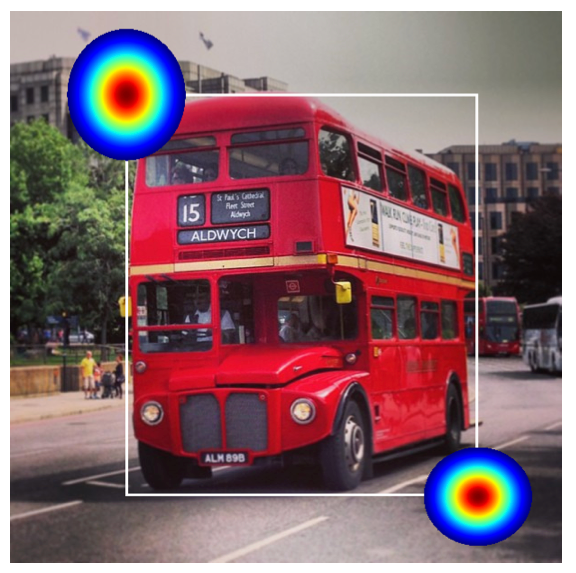

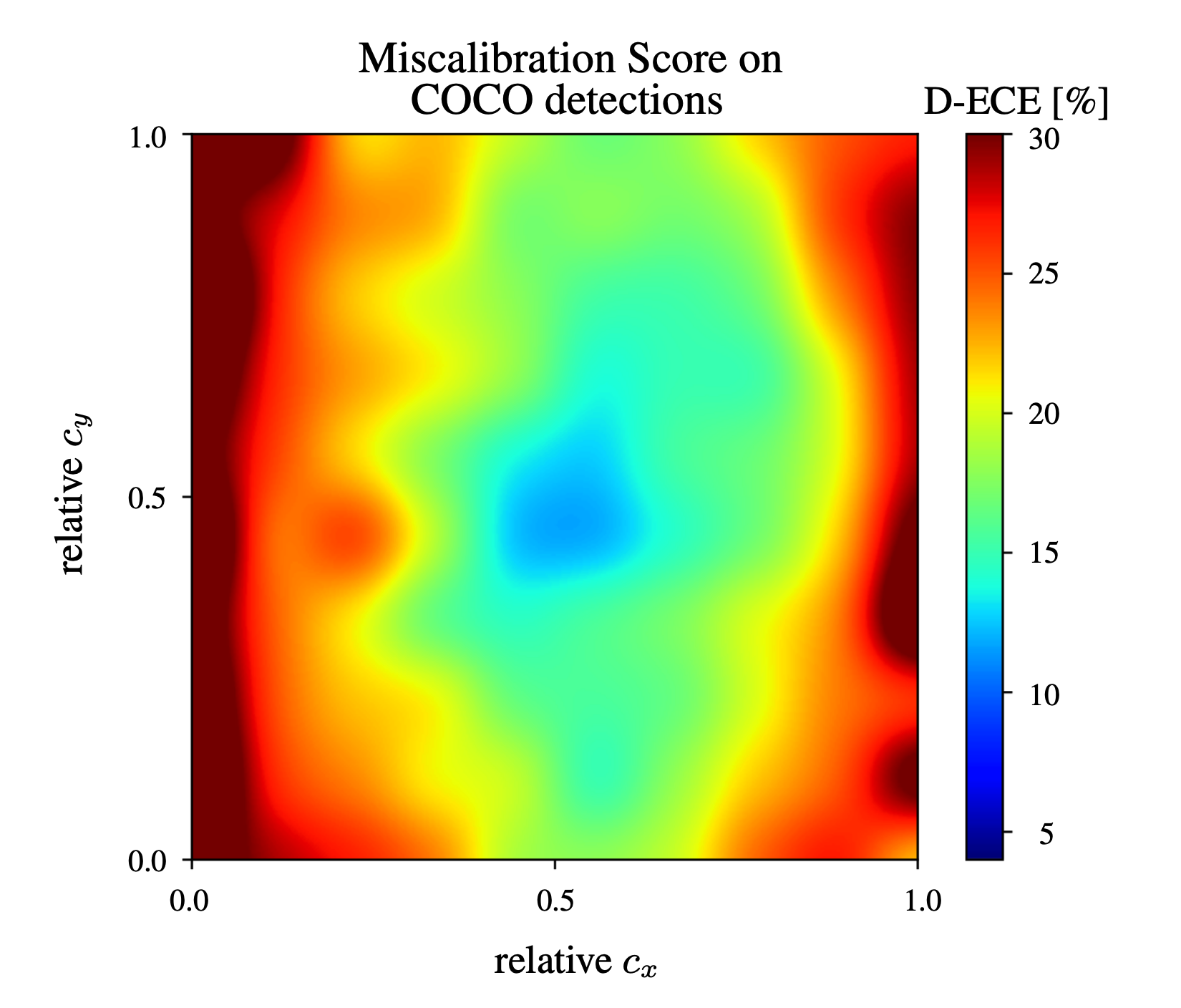

Multivariate Confidence Calibration for Object Detection

2020

We present a novel framework to measure and calibrate biased (i.e., miscalibrated) confidence estimates of object detection methods. The main difference from related work on classifier calibration is that we additionally use the regression output of an object detector (the predicted bounding box) for calibration. For the first time, our approach makes it possible to obtain confidence estimates that are calibrated with respect to image location and box scale.

Neural Networks, Uncertainty Calibration

Project Site
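One way to make location-dependent miscalibration measurable is to bin detections jointly over confidence and box properties, then compare the average confidence with the precision (fraction of matched detections) inside each bin. The sketch below assumes binning over confidence and the normalised horizontal box centre only, on a fixed, hypothetical grid. A detection counts as correct if the caller marked it as matched to a ground-truth box; the matching rule, such as an IoU threshold, is left open. The function name and data are hypothetical.

```python
def detection_ece(confs, cxs, matched, n_bins=(5, 2)):
    """Weighted mean |precision - avg confidence| over joint bins of
    (confidence, normalised box centre x).
    confs: confidences in [0, 1]; cxs: box centre x / image width in [0, 1];
    matched: 1 if the detection is a true positive, else 0."""
    bins = {}
    for c, x, m in zip(confs, cxs, matched):
        key = (min(int(c * n_bins[0]), n_bins[0] - 1),
               min(int(x * n_bins[1]), n_bins[1] - 1))
        bins.setdefault(key, []).append((c, m))
    n = len(confs)
    ece = 0.0
    for members in bins.values():
        precision = sum(m for _, m in members) / len(members)
        avg_conf = sum(c for c, _ in members) / len(members)
        ece += len(members) / n * abs(precision - avg_conf)
    return ece


# hypothetical detector: reliable on the left half of the image, not on the right
confs = [0.9, 0.9, 0.9, 0.9]
cxs = [0.1, 0.1, 0.9, 0.9]
matched = [1, 1, 0, 0]
print(detection_ece(confs, cxs, matched, n_bins=(5, 1)))  # confidence only
print(detection_ece(confs, cxs, matched, n_bins=(5, 2)))  # confidence and position
```

With only confidence bins, the left and right errors partly average out; splitting by box position exposes that the detector is overconfident on the right half of the image.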