Anselm Haselhoff

Professor

I am a Professor of Vehicle Information Technology at the Computer Science Institute of Ruhr West University of Applied Sciences, specializing in machine learning, computer vision, and trustworthy AI.

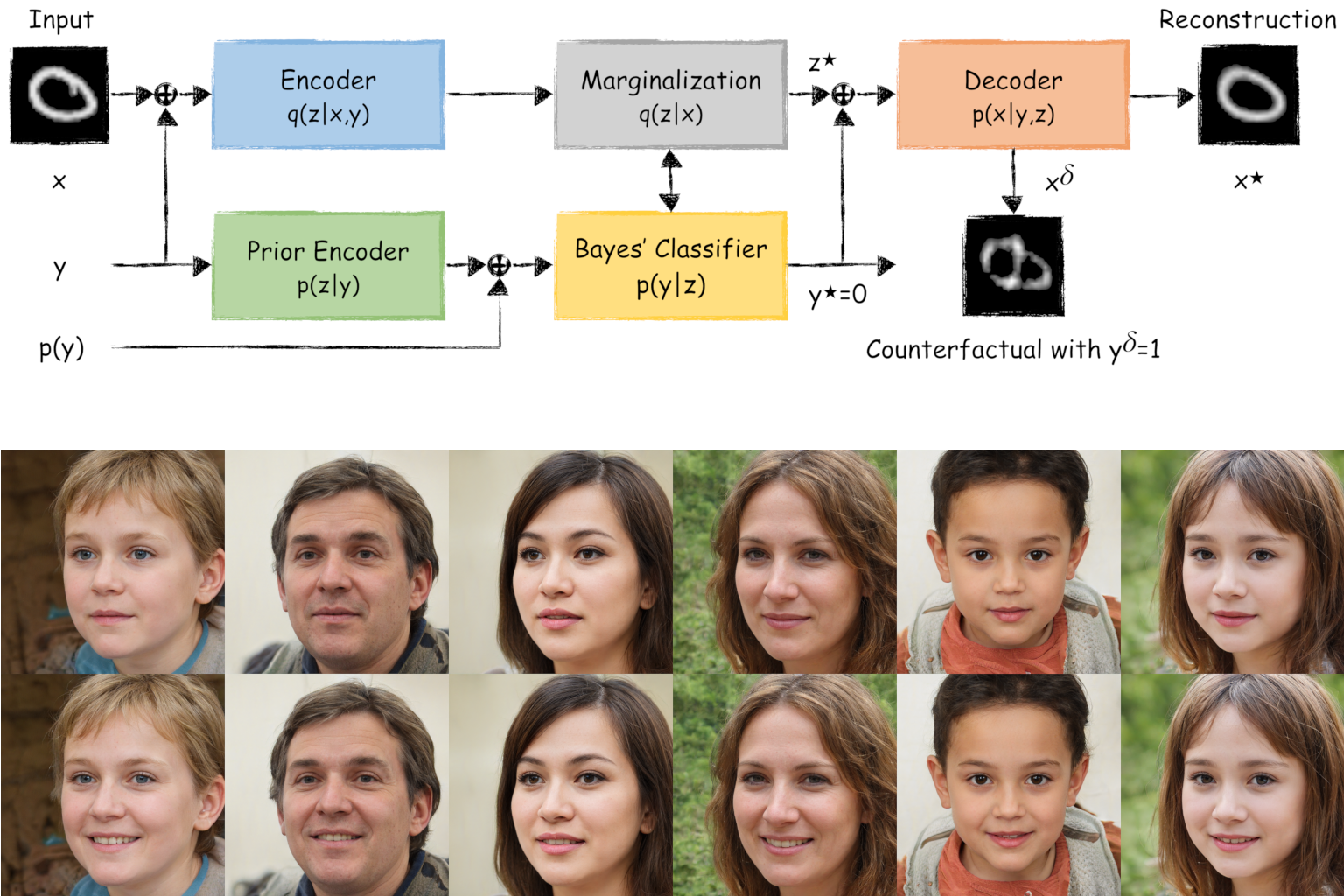

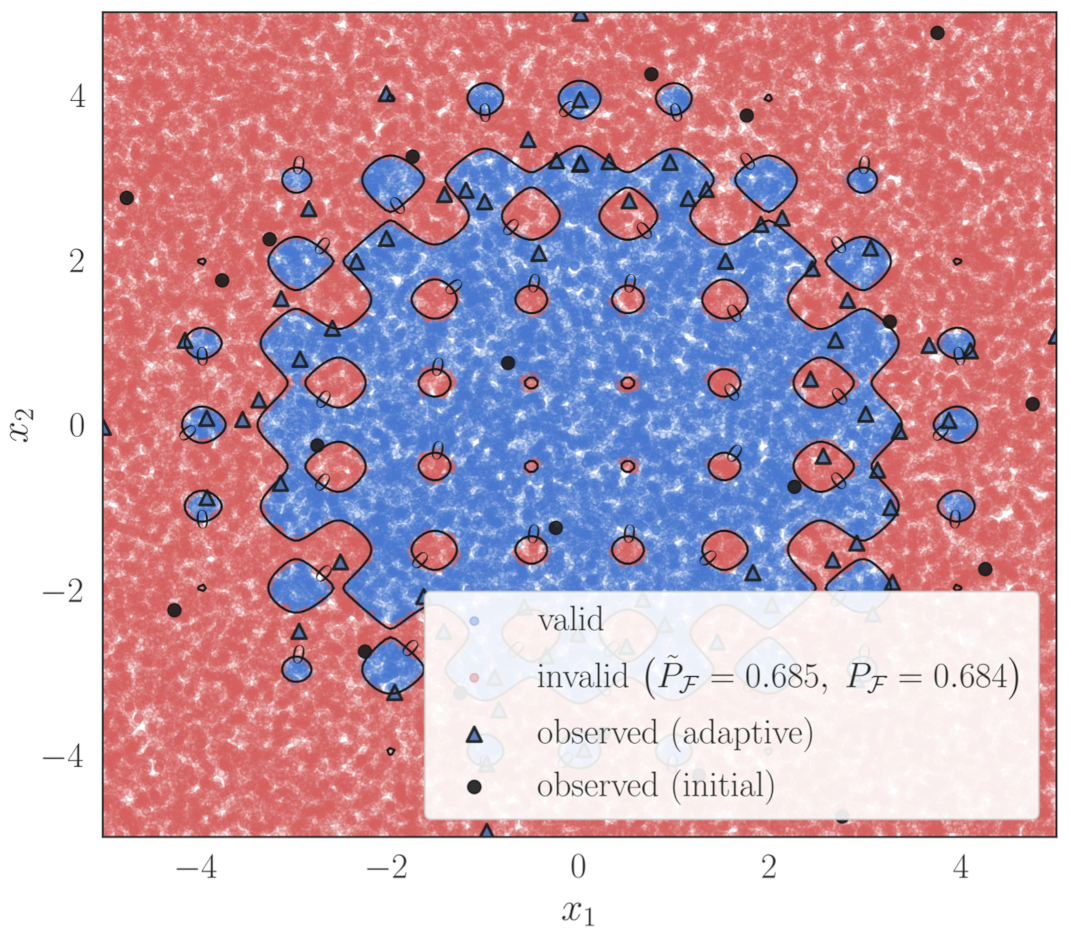

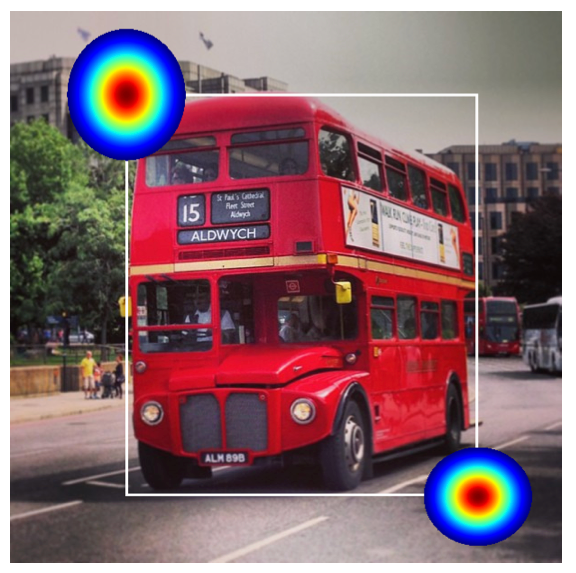

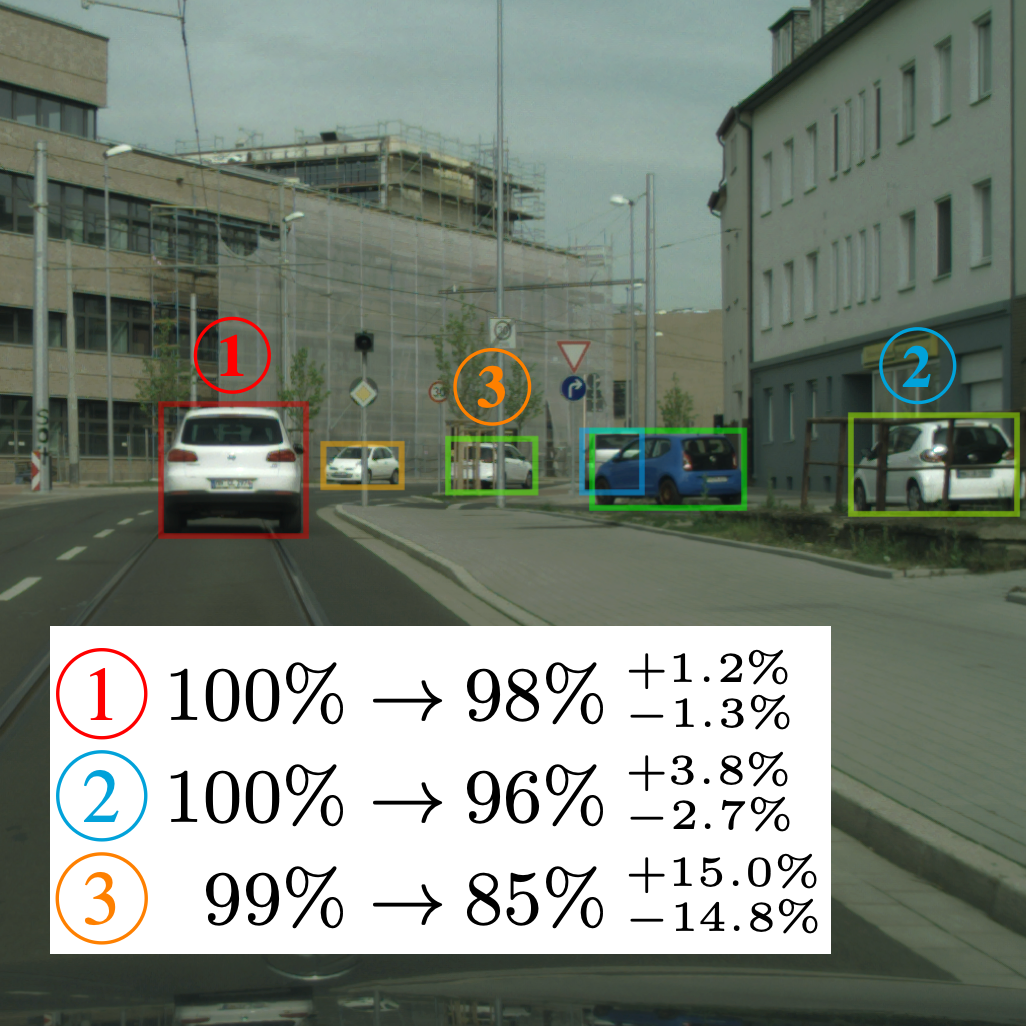

Interests: Machine Learning, Computer Vision, Deep Learning, Explainable Machine Learning, Autonomous Driving, Sensor Data Fusion, Object Detection and Tracking, Probabilistic and Generative Models